I’m always hopeful, but at this point, I seriously doubt Russia’s sincerity. Past ceasefire agreements, for example, were broken by Russian attacks, usually within hours of the agreement taking effect.

That would (just like Git LFS) store full, separate copies of every single version of the large files I manage. I really, really don’t want to go there, nor do I have even a fraction of the hard drive space for that…

That’s what I meant when I wrote “Git submodules can only point to a whole different repository” - they can’t point to a path inside a repository, only to another repository root. That unfortunately renders them useless for me (I’d have to set up on the order of hundreds of small repositories for the sets of shared data I have).

I’m already using Git for source code related versioning, but some use cases involving large binary files with partial updates aren’t well covered by Git (I’ve gone into some detail in my reply to @vvv@programming.dev).

There’s also the lack of svn:externals in Git. Git submodules can only point to a whole different repository as far as I’m aware.

I’m already using Git, thus my experience with Gitea. I am well versed in svndumpfilter and git-svn to extract and migrate individual Subversion repositories to Git.

I’m not only hosting code, but I have several projects involving large binary files with binary changes. Git’s delta compression algorithm for binary files is so-so. Git LFS is just outsourcing the problem. Even cloning with --depth 1 --single-branch gives me abysmal performance compared to Subversion.

So I’m still looking for a nice WebUI to make my life with the Subversion repositories I have easier.

After finding out that tools that are too “bureaucratic” don’t stick with me (bureaucratic as in, I need to fill out forms to create projects/tasks, update them and follow defined workflows), I ended up with Trilium.

At first it looks like a very free-form note-taking app (a tree of documents on the left, click and edit away), but it has a lot of extra functionality that lets you construct journals and task lists in the document tree (like its Task Manager, which is already set up in the Demo notes of a new Trilium install).

When you have a bunch of computers networked, each of them is assigned a unique number, so when other computers send data on the wire, they can say who it is meant for (imagine each blurb of data starting out like: “yo, I’m sending these next 500 bytes for computer 0A123FBC32, here they come”).

Now the right computer will listen, but it doesn’t know what program the data is for - is it a chunk of a file your browser is downloading? Or the email your email app wants to display? Or perhaps a join request from your buddy’s computer for the Minecraft game you’re hosting?

So in addition to the unique number of the target computer, the data also specifies a “port number”, which tells the computer which of its running programs the data is meant for (programs ask the computer’s operating system: “if any network data arrives on port XY, give it to me”). Some ports have become standards - for example, a program that serves web pages to other computers would typically ask the operating system to hand it any data arriving for port 80 or 443, and when a web browser wants to fetch a web page, it will send a request to the computer serving the page, defaulting to port 80 or 443.

If you dig deeper, you’ll find that there are even more unique numbers involved and routers/firewalls let data through not only by port number but also by distinguishing between data that is the initial request to another computer’s port number and data that is an answer to an earlier seen request – and more.
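To make the port idea a bit more concrete, here is a minimal sketch in Python, assuming everything runs on one machine over the loopback address 127.0.0.1. The function names (`serve_once`, `demo`) and the "got: " reply are made up for illustration. One program asks the operating system for a port, a second one sends data to that port, and the operating system hands the data to the first program.

```python
import socket
import threading


def serve_once(server_sock):
    """Accept one connection and answer whatever arrives on our port."""
    conn, _addr = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(b"got: " + data)


def demo():
    # "If any network data arrives on this port, give it to me."
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Port 0 asks the operating system to pick any free port for us.
    # A web server would ask for 80 or 443 instead.
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]

    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()

    # The "other computer": it names the target address AND the port.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(b"hello")
        reply = client.recv(1024)

    worker.join()
    server.close()
    return port, reply


if __name__ == "__main__":
    port, reply = demo()
    print(f"server listened on port {port}, client received {reply!r}")
```

The address picks the computer, the port picks the program. Real servers keep accepting connections in a loop instead of answering just once.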

Unix since 1979: upon booting, the kernel shall run a single “init” process with unlimited permissions. Said process should be as small and simple as humanly possible and its only duty will be to spawn other, more restricted processes.

Linux since 2010: let’s write an enormous, complex system(d) that does everything from launching processes to maintaining user login sessions to DNS caching to device mounting to running daemons and monitoring daemons. All we need to do is write flawless code with no security issues.

Linux since 2015: We should patch unrelated packages so they notify our humongous system manager about whether they’re still running properly. It’s totally fine to build a bridge between a process that accepts data from outside before anyone has even logged in and our absolutely secure system manager.

Excuse the cheap systemd trolling, yes, it is actually splitting into several, less-privileged processes, but I do consider the entire design unsound. Not least because it creates a single, large provider of connection points that becomes ever more difficult to replace or create alternatives to (similar to what would happen to web standards if only a single browser implementation existed).

Yes, I do that on all my VMs about every 3-5 weeks when it turns itself on again.

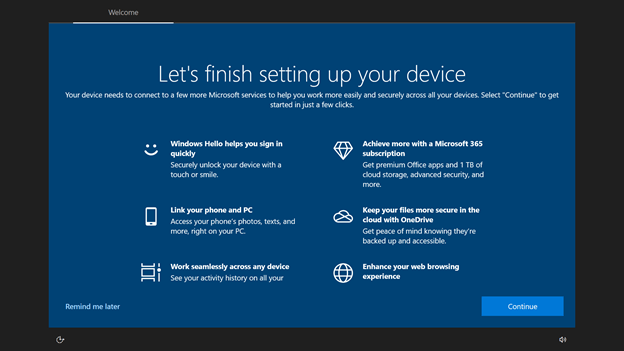

I love the “Let’s finish setting up your device” popup that prevents me from using my VMs regularly.

Like some condescending peddler trying to slam-dunk your agreement as a foregone conclusion.

Come on, buddy, let’s do those remaining tasks, let’s have Microsoft scan your face, tell Microsoft about your phone, let’s go and install those Microsoft apps missing from your phone, and your laptop, too, and then we go buy that Office subscription and have you store your important files on Microsoft’s servers and we really need to get around to switching to Microsoft’s web browser now.

And the only option you get is “Yes” or “Remind me later.”

If you turn it off (and it needs to be turned off in two places), it’ll be back on as soon as Microsoft publishes the tiniest update to any of its unwanted services. Harrghrrr! (artery popping noises)

I have a Windows VM that runs Visual Studio and a small number of developer tools so I can test my code on Windows. And another Windows VM that runs Daz3D, Clip Studio Paint and the Epic Launcher (to download stuff from the Unreal Engine Marketplace).

Sometimes I misuse either VM by creating a snapshot and installing Garmin Connect so I can update the music library on my watch :)

SuSE Linux (a German distribution), some niche, single-CD distribution, Debian for a while and, finally, since ~2006, Gentoo on my servers and since ~2015 Gentoo as my desktop.

Debian and its derivatives never felt right for me. I find too many drawbacks with binary packages (non-configurable build options, therefore dependencies that can’t be disabled, relying on humans to keep ABI compatibility, trouble integrating my own packages or unstable versions) and I just don’t like systemd.

It’s weird, I’ve seen more than enough of those “Install Gentoo” memes, but I find it the most pleasant system to run in the long term.

I’m on OpenRC, so I can’t say anything about systemd, but I have several SSHFS mounts (non-auto) listed in my fstab:

root@192.168.0.123:/random-folder/ /mnt/random-folder fuse noauto,uid=1000,gid=100,allow_other 0 0

Is that similar to what you’ve tried in your fstab? I’d assume replacing noauto with auto should just work, but then again, I haven’t tried it (and rebooting my system right now would be very inconvenient, sorry).

It also might require you to either use password-based login and specify the password or store the SSH keys in the .ssh directory of the user doing the mount (should be root with auto set).
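For reference, the six whitespace-separated fields of that fstab line and the noauto/auto swap can be sketched in Python. This is only an illustration of the line's structure, not something that touches a real /etc/fstab. The names `FstabEntry`, `parse_fstab_line`, `with_auto` and `format_fstab_line` are invented here, and whether the resulting entry actually mounts at boot is still untested, as said above.

```python
from typing import List, NamedTuple


class FstabEntry(NamedTuple):
    source: str        # what to mount (here: user@host:/remote/path)
    mount_point: str   # where to mount it locally
    fs_type: str       # file system type
    options: List[str] # comma-separated mount options
    dump: int          # fifth field, used by dump(8)
    fsck_pass: int     # sixth field, fsck order (0 = skip)


def parse_fstab_line(line: str) -> FstabEntry:
    """Split one fstab line into its six fields."""
    source, mount_point, fs_type, options, dump, fsck_pass = line.split()
    return FstabEntry(source, mount_point, fs_type,
                      options.split(","), int(dump), int(fsck_pass))


def with_auto(entry: FstabEntry) -> FstabEntry:
    """Return a copy of the entry with 'noauto' replaced by 'auto'."""
    options = ["auto" if opt == "noauto" else opt for opt in entry.options]
    return entry._replace(options=options)


def format_fstab_line(entry: FstabEntry) -> str:
    """Turn the entry back into a single fstab line."""
    return " ".join([entry.source, entry.mount_point, entry.fs_type,
                     ",".join(entry.options),
                     str(entry.dump), str(entry.fsck_pass)])


if __name__ == "__main__":
    line = ("root@192.168.0.123:/random-folder/ /mnt/random-folder fuse "
            "noauto,uid=1000,gid=100,allow_other 0 0")
    print(format_fstab_line(with_auto(parse_fstab_line(line))))
```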

I’m a little put off by the inconvenient command line and the mandatory bells and whistles (flathub is nice and all, but must it be baked into the main executable rather than having the package manager as an optional thing on top?).

So far, AppImage just looks superior to me. Works without installing a runtime into my system, no need to become root and integrate an app into a system-wide managed package repository, I can just run it.

Oh my, thank you very much for pointing that out!

I might have to give it another chance, then, perhaps I’ll shift my games partition back to NTFS once I can free up enough space.

I’m in the same boat. I’ve ended up using Paragon’s commercial ext4 drivers ($20) and while they absolutely work, they’re case sensitive and many Windows apps (especially Bethesda games) open their files with random upper/lowercase spellings that don’t match the files.
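To illustrate what those apps implicitly rely on, here is a rough Python sketch of a case-insensitive lookup on top of a case-sensitive file system. It is purely hypothetical (the function name `resolve_case_insensitive` and the example file names are made up) and has nothing to do with how Paragon's driver or Windows work internally. It only shows the kind of matching that NTFS on Windows normally gives a program for free.

```python
import os
import tempfile


def resolve_case_insensitive(root, relative_path):
    """Find the real on-disk path for a name spelled with arbitrary case.

    Returns None if no entry matches. If several entries differ only in
    case, an exact match wins, otherwise the first one found is used.
    """
    current = root
    # Accept both Windows-style and Unix-style separators.
    for part in relative_path.replace("\\", "/").split("/"):
        if not part:
            continue
        try:
            entries = os.listdir(current)
        except (FileNotFoundError, NotADirectoryError):
            return None
        matches = [e for e in entries if e.lower() == part.lower()]
        if not matches:
            return None
        chosen = part if part in matches else matches[0]
        current = os.path.join(current, chosen)
    return current


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "Data", "Textures"))
        real = os.path.join(root, "Data", "Textures", "Rock.dds")
        open(real, "w").close()
        # The app asks for a differently spelled path.
        print(resolve_case_insensitive(root, "data\\TEXTURES\\rock.DDS"))
```

On a case-sensitive file system, a plain open() with the misspelled path simply fails, which is why the affected games can't find their own files.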

I’ve done this (shared 3 NTFS partitions in a dual boot setup) from 2017 to the end of 2023 without issues.

The trick was to disable “fast startup” and hibernation. Otherwise Windows happily shuts down with the file systems in an inconsistent state. It’s just a question whether one can live with that in their Windows install.

I’ve used the old ‘ntfs’ driver that supposedly can’t write to… write files ranging from 100,000+ small files in folders to individual 200+ GiB files on NTFS partitions. It works pretty well and I have used it for video editing (few huge files), software development (many tiny files), Unreal Engine + Unity, Linux Gaming w/Steam and more. Rock solid.

After hearing that the ‘ntfs’ driver is supposed to be read-only, I switched to ‘ntfs3’ instead of using ‘ntfs3g’ (‘ntfs3’ is a separate driver built into the kernel, whereas ‘ntfs3g’ runs outside the kernel via ‘fuse’). From that point onwards, I’ve had major file system corruption nearly every day:

Personally, I’ll never use ‘ntfs3’ for serious work again. But ‘ntfs3g’ is generally considered very stable, maybe my issues are specific to ‘ntfs3’ or my RAID setup (weird nested mdraid thanks to Intel) is to blame.

My final ‘fix’ was to move everything to ext4 and buy Paragon’s $20 ext4 drivers for the dual boot Windows install. It’s only seeing any use once every 2 months. Sadly, these drivers are case sensitive even on Windows, rendering Bethesda games unplayable when installed on those partitions, for example.

Yep. The personal responsibility gambit (or should I say fallacy?).

It was such a clever idea, starting with Coca Cola’s “Litterbug” campaign (where they campaigned against bottle deposits under the guise of wanting “personal responsibility” over “regulations”).

It’s “up to the consumer” to make the right choices. It just so happens that the meat from decently treated animals is five times more expensive and that you have to drive 100 miles to buy it. Or that being environmentally conscious has been made into a tiring exercise in futility where you constantly have to inconvenience yourself.

As an added bonus, individuals trying to convince other individuals to inconvenience themselves in the same way can be painted as obnoxious, holier-than-thou and insufferable. A real double win for unscrupulous big business.

I don’t know why that comment is collecting downvotes. They are referencing George Orwell’s “Animal Farm.”

Context: “Animal Farm” is a story about how communism can devolve into dictatorship. In the story, the animals on a farm drive out their tyrannical drunkard farmer. They write on the barn wall: “all animals are equal” and live in communist utopia. But some animals, too, hunger for power and status. Rather than overturn the system, they undermine it by adding “…but some animals are more equal than others” to the barn wall, legitimizing a ruling class (themselves) because they are “more equal.”