As a retro enthusiast, I’ve been following this project for a while and it’s been great seeing all the improvements over the years. I recommend checking out this video on its current state: https://youtube.com/watch?v=nWjAxNHXd_8

Equally interesting, if not more so, is their Ladybird browser, which is cross-platform. It’s been making great progress as well, and I sincerely hope it can compete with the big two someday - it would be nice to have a major browser/engine that’s not based on WebKit or Gecko.

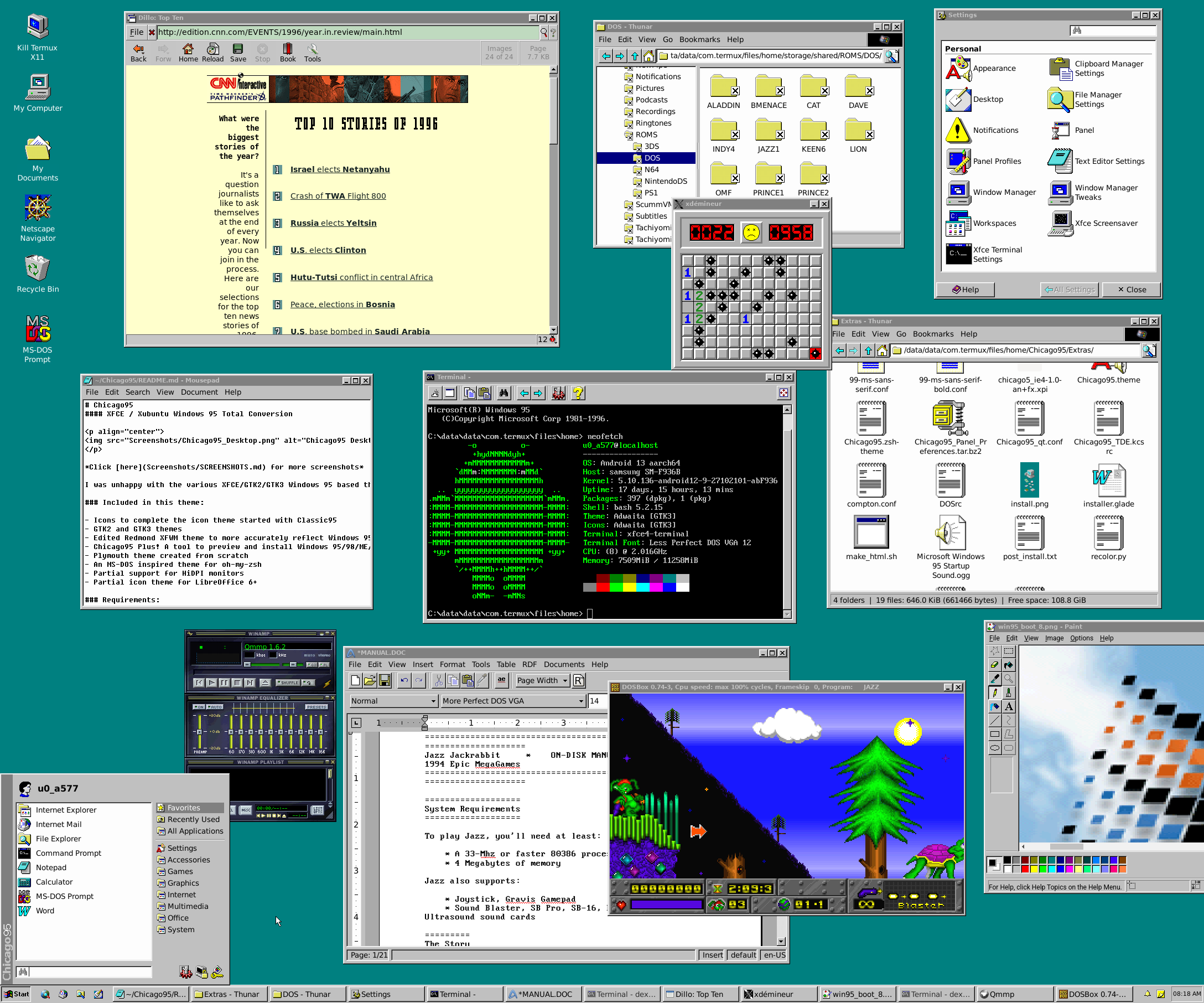

I wish 90s interfaces would make a comeback; I really miss the aesthetics of that era. Luckily, there are some excellent themes out there that scratch that itch, like Chicago95 for XFCE - and here’s a bonus screenshot of it running on my Galaxy Fold 4:

:)

Nope. https://reactos.org/wiki/Missing_ReactOS_Functionality